Power Query – Optimization Using Models and Dataflows

By Simon-Pierre Morin, eng. – April 2024

(beginner level)

Following the previous optimization articles, let’s take a look at some other tools at our disposal. This time, we will address the improvement of report loading performance. Note that performance improvement is independent of all the optimizations discussed previously and can be done at any time.

First, it is important to know that as a data acquisition engine, Power Query allows for several types of manipulations. However, performing these manipulations at this stage is not always the optimal solution.

Among the non-optimal operations, we have, for example:

- Some operations related to the manipulation and transformation of data.

- Some acquisitions of raw data.

- The decentralization of data.

- The redundancy of operational effort.

- Data inconsistencies caused by asynchronous maintenance.

Some of these operations are so costly that they can slow down the generation of reports. It is sometimes more optimal to execute them in the model rather than at the source. Moreover, centralizing certain operations, especially if they are reused in several reports, brings a gain in performance, maintainability, and uniformity across all reports affected by these transformations. It thus becomes interesting to share the burden of data transformation.

It is with this in mind that I present to you model manipulations and dataflows.

Processing the Model

The initial observation is that it is not necessary to do everything in Power Query. It is possible to delegate some of the work to the model using the DAX (Data Analysis Expressions) language. In Excel, you can also use formulas and tables. It would also be possible to use code (VBA or .NET), but I won’t delve that in this article.

Excel

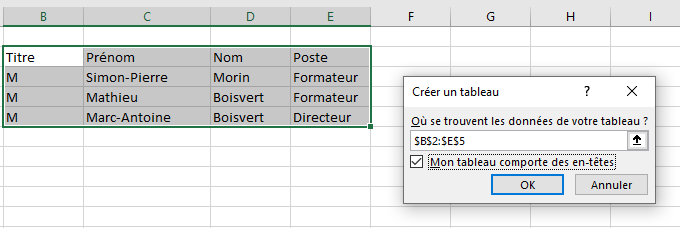

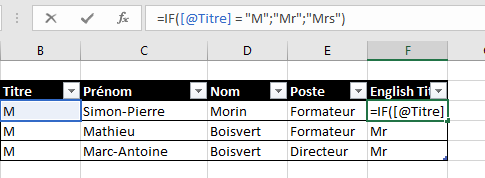

In Excel, for improved performance in your manipulations, always work with tables rather than individual cells. Tables allow for better reference management. By default, when you receive your data from Power Query or any other data source, Excel will automatically organize it into tables. You can also convert your cells into tables. (Sorry for the French screenshots.)

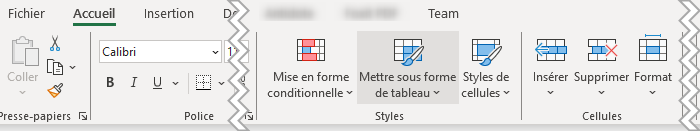

Into the “Home” ribbon, in the “Styles” sections, click the “Format as Table” button.

If your table has headers, be sure to check that options in the next window.

This will give you access to your column headers in your formulas, which will automatically apply to the entire column.

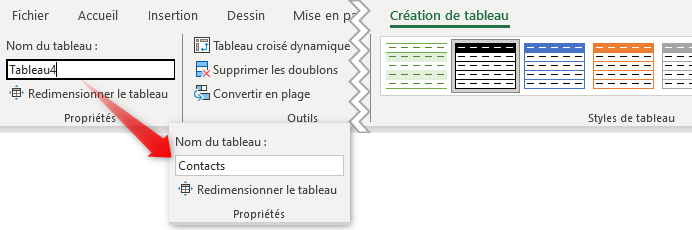

It is also recommended to always name your tables with meaningful names.

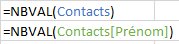

This way, you can directly refer to your table and its columns in your formulas, even if its dimensions change.

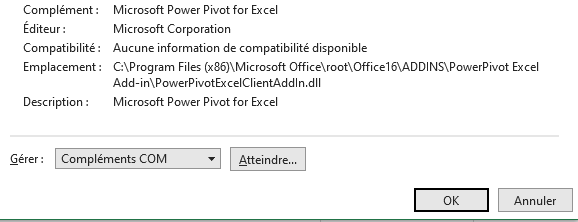

Additionally, you might want to activate Power Pivot in Excel to facilitate model manipulations and take advantage of DAX (Data Analysis Expressions). Power Pivot is already provided in Excel but is disabled by default. To enable it:

- Go to the File menu and select Options.

- In the dialog window, navigate to the Add-ins section.

- Choose COM Add-ins.

- Check the box for Microsoft Power Pivot for Excel.

This operation is optional.

DAX in General

Operations on the model or tables are more efficient for operations directly related to records or their aggregates. These calculations occur only when necessary. The rest of the time, the model will use the data set stored in memory. Some examples of operations that are better left to the model include:

- Full table copy

- Resulting table from filtering another table

- Calculated information based on another column

- Measures based on one or more filters

- Aggregate calculations

- Management of tables with hierarchy path

All these operations require minimal resources compared to the equivalent done in Power Query. It’s important to keep in mind that DAX will only calculate the data required by the filter for display, unlike Power Query, which always calculates the entire set of records for each acquisition.

For example, when making a table copy in Power Query, even if it’s internally just a reference, all the data will be loaded twice into the model during data acquisition. The same copy in DAX will load the data only once during acquisition and use the information in memory for the copy. The same principle applies to sub-tables that filter raw data or create an aggregate.

In real-world scenarios, in terms of performance gain, I have achieved results up to fifty times faster using DAX compared to its purely Power Query counterpart. Depending on the operations to be performed, the question to ask is: ‘Should the operation be done at the source to alter all the data, or is it information required for display?’ In the first case, Power Query is the ideal candidate; in the second case, it may be preferable to pass the torch to the model for performance reasons.

Dataflow

For Power BI Pro service users, there’s another valuable asset available: dataflows. These allow you to create datasets accessible across your entire workspace. Here are the key advantages of dataflows:

- Uses the same language as Power Query, making it seamless to work with.

- Data transformations are centralized within the dataflows.

- If a refresh encounters an error, it provide recovery options.

- Offers quick and efficient connections in reports.

- The connection burden is shifted to dataflows, ensuring it doesn’t impact report performance.

- Single Connection for accessing datasets from the dataflows.

- Usable as a data source in Power BI, Excel, and more.

The primary advantage lies in centralizing datasets within a single dataflow, allowing you to connect to it as if it were a conventional database. From a report perspective, the dataflow source provides access to the exposed tables. Additionally, table results are not recalculated with every refresh, resulting in an improved performance for complex queries requiring multiple transformations.

Since dataflows serve as a centralized source, any modifications made to them impact all reports using it as a data source. This also standardizes transformations across multiple reports. However, be cautious: breaking a dataflow will affect all reports relying on it.

How to Create?

First, the Data Flow editor also uses Power Query. In most situations, simply copying the M code into the dataflow editor suffices. However, there are two important points to consider:

- Some functions are not available within dataflows.

- It is not possible to have a type other than primitive tables as output.

Among unsupported functions there are third-party functions and certain proprietary functions (such as the VSTS function suite for connecting to Azure DevOps) that are not supported. Nevertheless, it is still possible to transfer the reporting burden by moving the supported part into a dataflow.

For the output data type, anything that returns a single primitive value (variable, constant, parameter), a set (list, record), or a function will be converted into a table if the query export option is enabled. Ensure that objects and anything that should not be converted into a table are not exported to keep the dataflow functional.

Also, note that only primitive types are allowed as exported values in tables. It is not possible to have tables containing records, lists, or functions. Unfortunately, that also means that you cannot use dataflows to create a centralized and shareable library of functions.

During the validation step, the engine ensures that each output is a table, each column has a defined primitive type, and columns have no errors (errors are replaced with null values).

For more detailed configuration information, refer to Microsoft’s documentation.

When should we use a dataflow?

There are several use cases for dataflows. If you need to reuse queries, host your transformations in the cloud, and simplify data modeling, you’ll want to use dataflows. However, if you primarily work on a specific report, a direct Power Query query within the report is sufficient.

Dataflows are a powerful tool for data preparation and modeling. Here are some points to consider when deciding whether to use a them instead of a direct Power Query query within the report:

- Reusability and Sharing: designed to be reusable by a large number of users. If you have queries that are part of multiple data models, it will allow for easier sharing and reuse.

- Cloud Hosting: queries are performed and hosted on the Power BI service. This centralizes data preparation and avoids duplication of efforts.

- Modeling and Performance: If you need to analyze large amounts of data and present it in reports and dashboards with relevant performance indicators, this can be more efficient. They prepare unified data for modeling and optimize performance.

- Entities and Relationships: allows mapping of entities, making it easier to access more complex data sources and establish relationships between different data sources.

The combined benefits also help share the loading burden. Since reports are separate from data transformations, report loading is very fast. Data refresh happens in the background, and a “safe” mechanism prevents a report from breaking if a source is inaccessible, providing the last successful version.

Still Curious?

The domains of DAX, Excel, Power Query, and Power BI are covered by several experts of the field. For those who would like additional examples, concrete ideas, or are simply eager to learn more, I recommend checking out the examples from Chandeep, Bas Dohmen, and the Guy in a Cube team.

If you want to delve deeper into a new topic, visit our Blog section where you’ll find several articles. This library is continually growing, so keep coming back or subscribe to our LinkedIn feed to stay informed about new content.